Most developments in data science rely on analysing huge amounts of data, including users’ preferences, with machine learning and other Artificial Intelligence methods. Researchers must be aware that analysing users’ practices and modelling their preferences or behaviour requires access to personal data, which raises ethical considerations and calls for new ways of dealing with security threats. Data are at the centre of any AI development and deployment. Any trustworthy AI built on them (see High-Level Expert Group on Artificial Intelligence, Ethics Guidelines for Trustworthy AI) must be (1) lawful, complying with all applicable laws and regulations; (2) ethical, ensuring adherence to ethical principles and values; and (3) robust, to prevent unintentional harm. These three components are closely interrelated and require appropriate bridges between them, which we investigate in LeADS. In addition, even the Guidelines for Trustworthy AI do not “explicitly deal with Trustworthy AI’s first component (lawful)”, a gap that the LeADS research and training programme will cover.

Prevention of harm is an ethical imperative for AI that requires the utmost attention to prevent malicious uses. Consequently, security has to evolve on an ongoing basis, and the security management process should be flexible enough to adapt the organization's security quickly to new threats. As attacks became more sophisticated, detecting them required correlating the security events generated by every intrusion detection system (IDS). Security Information and Event Management (SIEM) systems were therefore introduced on top of IDSs to aggregate and correlate security events and to provide dashboards. Dedicated SIEMs such as OSSIM or PRELUDE have been deployed. However, with the growing number of security events to consider, the current strategy is to implement a SIEM using big-data solutions such as the ELK stack (Elasticsearch, Logstash, Kibana). This strategy addresses operational scalability by facilitating cloud deployments and by providing rich features for data analytics and machine learning-based analysis, but it again requires the dual skills that LeADS develops through research and training.
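To make the idea of correlating events across several IDSs concrete, the following is a minimal sketch in Python. It is not how OSSIM, PRELUDE, or the ELK stack implement correlation; the event fields (`sensor`, `src_ip`, `time`, `signature`), the five-minute window, and the rule "same source seen by at least two sensors" are all assumptions chosen for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical, simplified events; real IDS alerts carry many more fields.
EVENTS = [
    {"sensor": "ids-a", "src_ip": "10.0.0.5",
     "time": datetime(2024, 1, 1, 12, 0), "signature": "port-scan"},
    {"sensor": "ids-b", "src_ip": "10.0.0.5",
     "time": datetime(2024, 1, 1, 12, 2), "signature": "ssh-brute-force"},
    {"sensor": "ids-a", "src_ip": "10.0.0.9",
     "time": datetime(2024, 1, 1, 12, 1), "signature": "port-scan"},
    {"sensor": "ids-b", "src_ip": "10.0.0.5",
     "time": datetime(2024, 1, 1, 14, 0), "signature": "port-scan"},
]


def correlate(events, window=timedelta(minutes=5), min_sensors=2):
    """Flag sources reported by several sensors within one time window.

    Illustrative rule only: events are grouped by source address, and a
    source becomes an incident when at least `min_sensors` distinct
    sensors report it within `window` of some starting event.
    """
    by_source = defaultdict(list)
    for event in events:
        by_source[event["src_ip"]].append(event)

    incidents = []
    for src_ip, group in by_source.items():
        group.sort(key=lambda e: e["time"])
        for i, first in enumerate(group):
            in_window = [e for e in group[i:]
                         if e["time"] - first["time"] <= window]
            sensors = {e["sensor"] for e in in_window}
            if len(sensors) >= min_sensors:
                incidents.append({
                    "src_ip": src_ip,
                    "sensors": sorted(sensors),
                    "signatures": [e["signature"] for e in in_window],
                })
                break  # this sketch reports at most one incident per source
    return incidents


incidents = correlate(EVENTS)
for incident in incidents:
    print(incident)
```

No single sensor in this toy data set sees both the scan and the brute-force attempt; only the aggregated view links them to one source, which is the gap a SIEM is meant to close.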

Accordingly, the research component in LeADS will set the theoretical framework and the practical implementation template of a common language for co-processing and joint-controlling key notions for both data scientists and jurists working at the confluence of Artificial Intelligence and Cybersecurity. Its outcomes will also include a comparative and interdisciplinary lexicon that draws on experts from these fields to define important crossover concepts. The cross-fertilization of scientific cultures is one of LeADS's flagship characteristics, generating a much-needed and currently absent multi-level, multi-purpose common understanding of concepts useful for future researchers, policy makers, software developers, lawyers and market actors. For instance, managing and preventing personal data breaches is, for a company (Data Controller), a cost to minimize in the traditional cybersecurity paradigm, but for data subjects the same data breach is an assault on their fundamental rights. The GDPR requires Data Controllers to minimize the risks to data subjects’ fundamental rights, eliciting an entirely new approach to risk minimization and cybersecurity.

LeADS research objectives are twofold:

LeADS aims to develop a common language that will assist producers, service providers, and consumers in managing and contracting over their information assets and data in a less antagonistic and more unitary fashion. A common language is required for co-processing and joint-controlling key notions for both data scientists and jurists working at the confluence of Artificial Intelligence and Cybersecurity.

LeADS is animated by the idea that, in the digital economy, data protection holds the key both to protecting fundamental rights and to fostering the kind of competition that will sustain the growth and “completion” of the “Digital Single Market” and the competitiveness of European businesses outside the EU. Under LeADS, the General Data Protection Regulation (GDPR) and other EU rules will dictate the transnational standard for the global data economy, while the programme trains researchers able to drive the process and set an example.

The articulated research programme (detailed in the ESRs’ descriptions) will move hand in hand with the training (training itself being a component of the research). The overall research network will map the conceptual gaps among the disciplines involved and produce a clear glossary to reduce misunderstandings and the impracticability of the technical and legal solutions adopted.

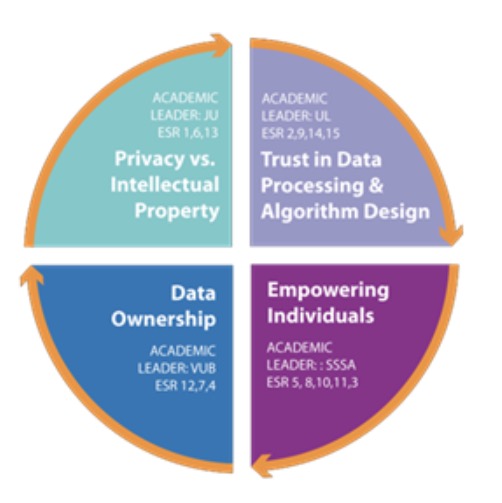

LeADS's innovative theoretical model is based on the conceptualization of two deep characteristics of data: the ease of correlating ostensibly anonymized data to actual individuals, as addressed from different perspectives by ESRs 5, 8, 9, 11 (we call it “Un-anonymity”); and the further uses of private data enabled by algorithms and machine learning that were unexpected at the time of first collection or processing (we call it “Data-Privaticity”). The interaction between un-anonymity and data privaticity can either cause direct or indirect harm, or lead to the production of private or public gains, with a potential impact on various legal domains, such as competition, consumer protection, distance contracts, public and individual health, individual freedoms, etc., addressed in particular by ESRs 2, 12, 14, 15. A typical example is dark patterns: user interfaces carefully crafted to trick users into doing things, such as buying insurance with their purchase or signing up for recurring bills, an operation often set in motion by artificial agents using personal data analytics tools.
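The "Un-anonymity" notion can be illustrated with a minimal linkage sketch in Python. It is only an illustration under stated assumptions, not a method taken from the LeADS research: the records, the names, and the choice of `postcode`, `birth_year` and `sex` as quasi-identifiers are all invented for the example.

```python
from collections import defaultdict

# Assumed quasi-identifiers: attributes that are not names but that,
# combined, may single out one person.
QUASI_IDENTIFIERS = ("postcode", "birth_year", "sex")

# Hypothetical "anonymized" data set: names removed, attributes kept.
anonymized = [
    {"postcode": "56127", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"postcode": "56127", "birth_year": 1975, "sex": "M", "diagnosis": "diabetes"},
    {"postcode": "31000", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]

# Hypothetical auxiliary data set that still carries names.
public_register = [
    {"name": "Alice Example", "postcode": "56127", "birth_year": 1980, "sex": "F"},
    {"name": "Bob Example", "postcode": "56127", "birth_year": 1975, "sex": "M"},
    {"name": "Carl Example", "postcode": "56127", "birth_year": 1975, "sex": "M"},
]


def quasi_key(record):
    """Return the tuple of quasi-identifier values for a record."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(records, register):
    """Link records to names when the quasi-identifiers match one person."""
    names_by_key = defaultdict(list)
    for person in register:
        names_by_key[quasi_key(person)].append(person["name"])

    matches = []
    for record in records:
        candidates = names_by_key.get(quasi_key(record), [])
        if len(candidates) == 1:  # a unique match means re-identification
            matches.append((candidates[0], record["diagnosis"]))
    return matches


matches = reidentify(anonymized, public_register)
print(matches)
```

In this toy data only one record is linked to a name: the second record matches two people in the register and stays ambiguous, and the third has no counterpart. How easily real data sets can be linked depends on how many people share each combination of attributes.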